An Imperfect Guide to Imperfect Reproducibility

Gabriel Becker

Twitter: @groundwalkergmb, GitHub: gmbecker

Intro

May Institute For Computational Proteomics 2019, Northeastern University

Who I Am

Gabe Becker

Twitter: @groundwalkergmb, GitHub: gmbecker

What I do

- Research reproducibility and related issues

- Contribute^ to the R language itself

^ I collaborate with, but am not a member of, the R-core development team.

Who I Was

Formerly Scientist at Genentech Research

Some content in this talk was developed (by me) while at Genentech and is copyright Genentech, Inc. Used with permission.

What I'll Be Talking About

- Theory - mental models

- Reproducibility - what it does and doesn't give us

- Generalizing Reproducibility

- Practice - what you can do now to improve your work

I Wish Reproducibility Didn't Matter

So Many Things Would Already Be Solved

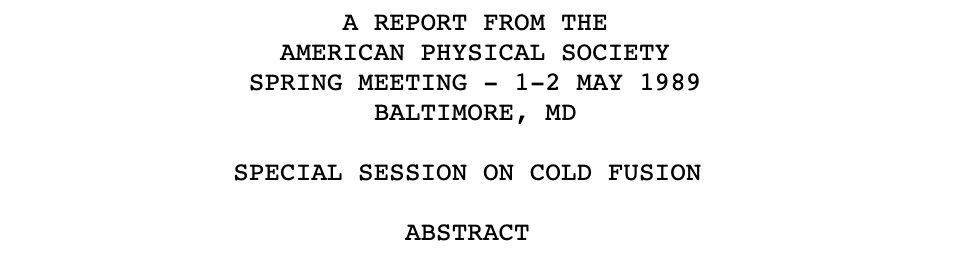

Energy (Cold Fusion)

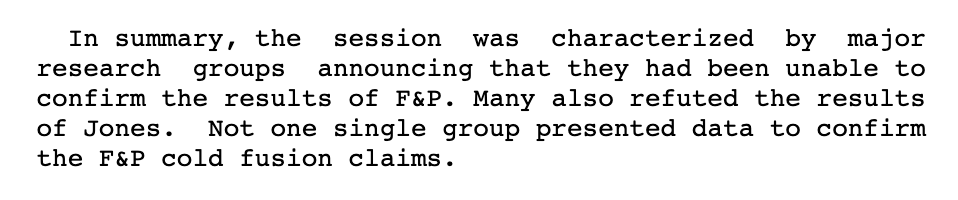

Personalized Cancer Treatment

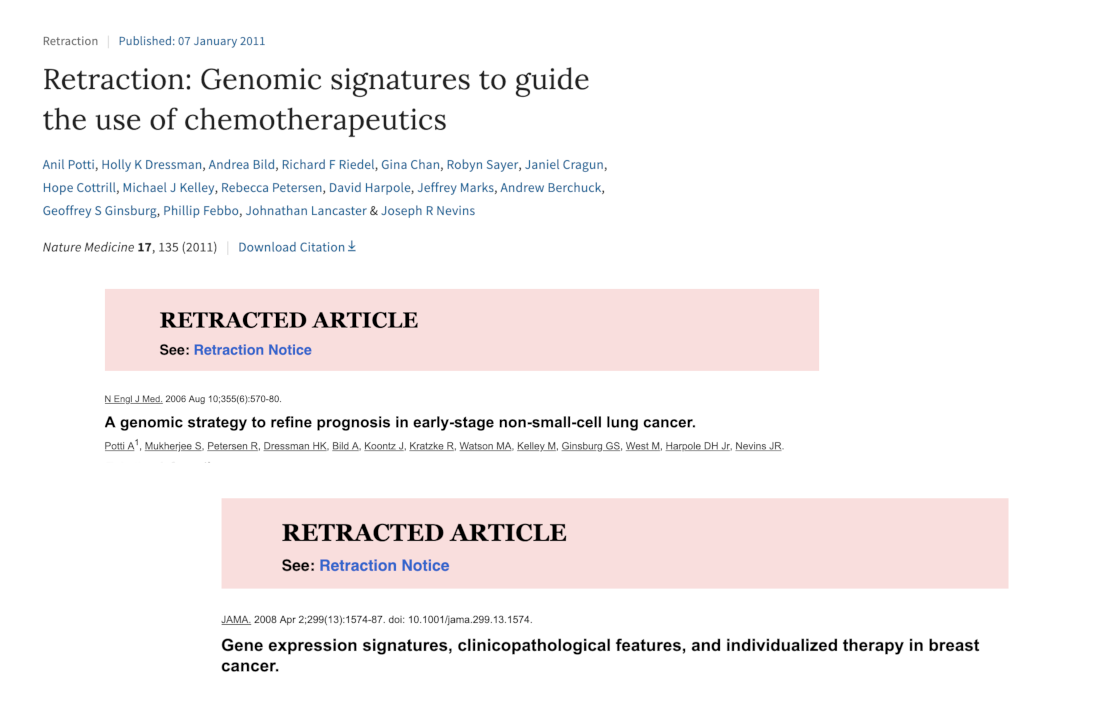

Economics

Aliens

But We're Stuck In The Real World

And none of those claims were true^

^To the best of our current knowledge

(Computational) Reproducibility Is Not The Point

Knowledge Management

Reproducibility

So To Recap

- The world would be better if all results were reproducible

- I don't really care if your results are reproducible

Seem Like A Contradiction?

What Do We Really Want?

Results Are No Use To Us If They're Not Useful

But How Does Reproducing a Result Make It Useful?

We Want

Results we can understand and feel confident using (Gavish and Donoho^)

- Incorporating them into our overall understanding

- Extending or directly utilizing them in our own work

- Talking about them at dinner parties

^ Gavish and Donoho, A Universal Identifier for Computational Results, Procedia Computer Science 4, 2011

Trust, Verification and Guarantees

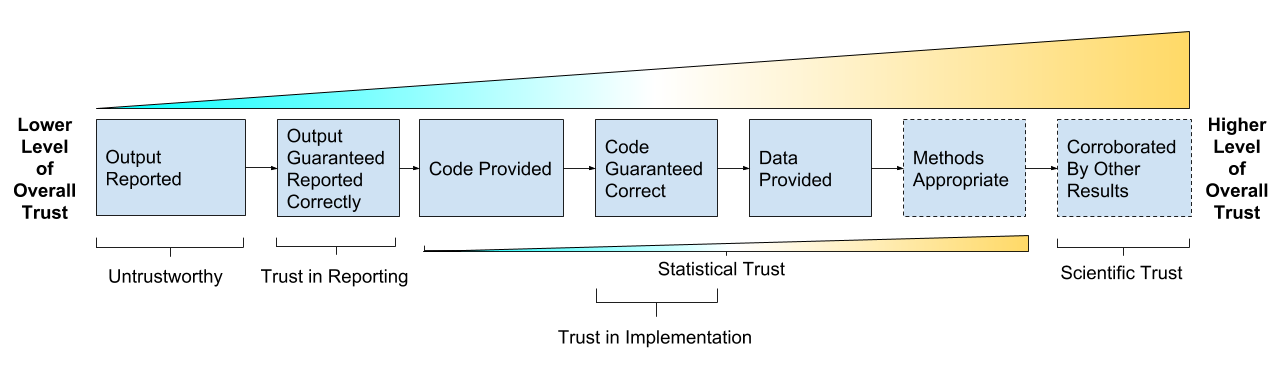

Ways We Can Trust Results

- Trust in Reporting - result is accurately reported

- Trust in Implementation - analysis code successfully implements chosen methods

- Statistical Trust - data and methods are (still) appropriate

- Scientific Trust - result convincingly supports claim(s) about underlying systems or truths

Reproducibility As A Trust Scale

Source: Gabriel Becker, copyright Genentech Inc.

What Does Strict Reproduction Prove?

It confirms^ the original analyst(s)

- Got the result they say they got

- By applying the code they provided

- To the input data they identified

- In the environment specified

And ensures you have the artifact itself

^ Technically, it does not confirm these in the deductive sense; rather, it establishes them in the "beyond a reasonable doubt" sense.

A Brief Thought Experiment

Imagine an Independent, Trusted System Which

- Accepts code, data, and environment

- Runs the code

- Saves and publishes results and associates them with code and env

- Deletes the data (if necessary)
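
The steps above can be sketched in a few lines. This is a toy illustration in Python, not a design the talk proposes: the function `trusted_run`, the record fields, and the convention that submitted code defines `analyze(data)` are all assumptions made for this example.

```python
import hashlib
import json


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of some bytes as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def trusted_run(code: str, data: bytes, env: dict) -> dict:
    """Hypothetical trusted runner: execute code on data, keep only a record.

    Assumes the submitted code defines a function `analyze(data)` whose
    return value is JSON-serializable.
    """
    namespace = {}
    exec(code, namespace)                # load the submitted analysis code
    result = namespace["analyze"](data)  # run it on the submitted data
    record = {
        "code_sha256": sha256_hex(code.encode("utf-8")),
        "env_sha256": sha256_hex(json.dumps(env, sort_keys=True).encode("utf-8")),
        "result": result,
    }
    # The data itself is never written into the record; only the result and
    # the fingerprints of code and environment are kept for publication.
    return record


example_code = "def analyze(data):\n    return len(data.split())\n"
record = trusted_run(example_code, b"a b c d", {"python": "3.x"})
print(json.dumps(record, indent=2))
```

A real system would need to sandbox the submitted code rather than call `exec` directly, and would need some way to establish that the environment description matches what actually ran.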

This Would Provide Guarantees

Very similar (at least) to those gained by manual, strict reproduction,

without requiring us to actually recreate the result at an arbitrary later date.

Reproducibility Isn't Everything - A Case Study

DESeq Paper

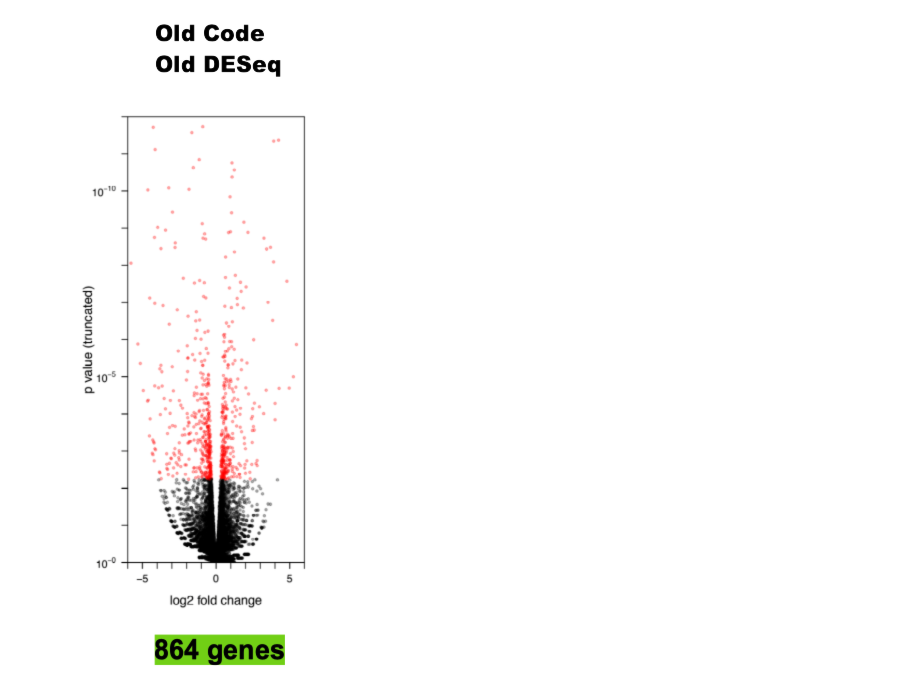

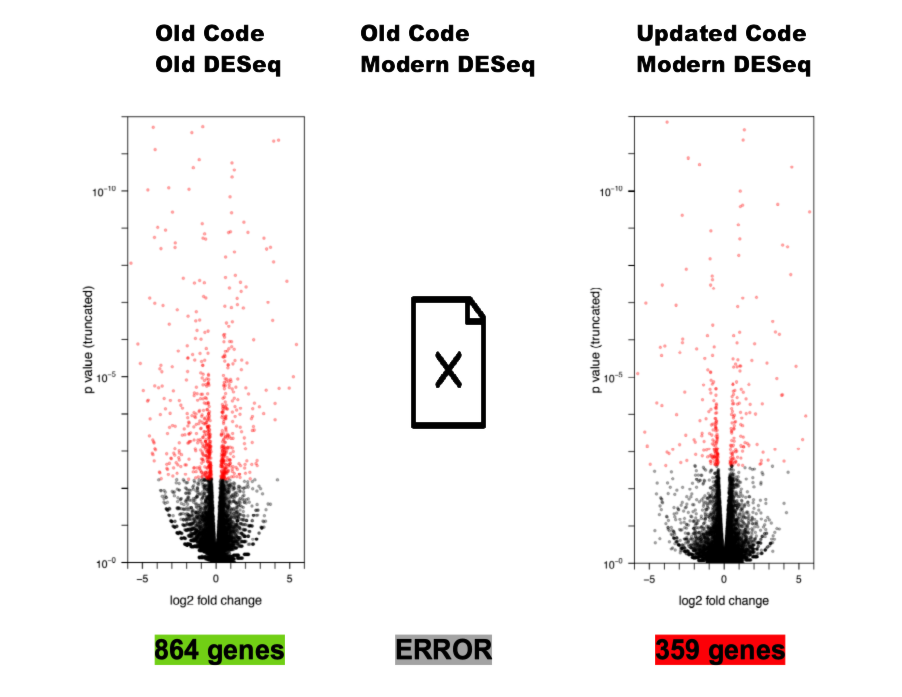

It's Reproducible!

Source: Gabriel Becker, copyright Genentech Inc.

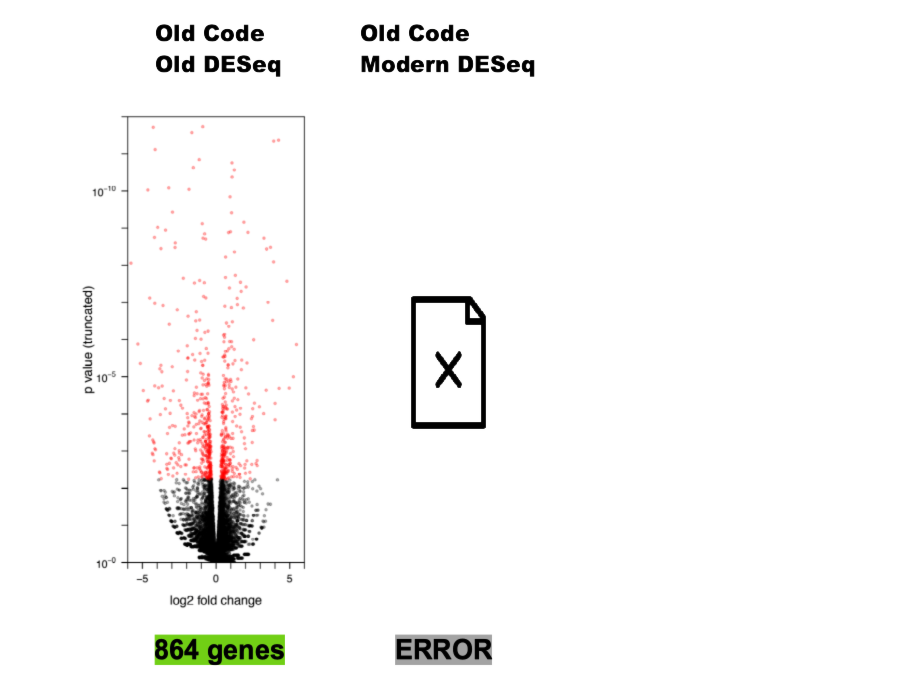

Not The Whole Story

Source: Gabriel Becker, copyright Genentech Inc.

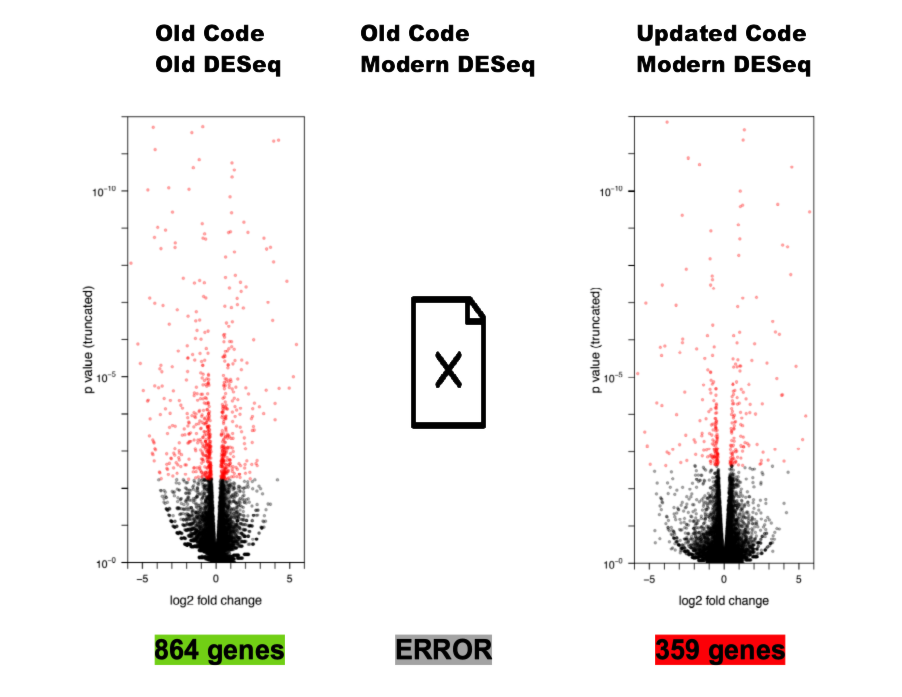

Really Not The Whole Story

Source: Gabriel Becker, copyright Genentech Inc.

Generalized Reproducibility

Reproducibility is great, but by itself it is neither as necessary nor as sufficient as many seem to think.

– Me (and, like, other smart people too probably)

Topics In Generalized Reproducibility

- Comparability

- Currency

- Completeness

- Provenance

Comparability

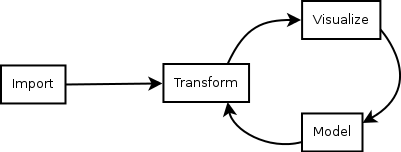

Individual "Data Science" Workflow

credit: Wickham

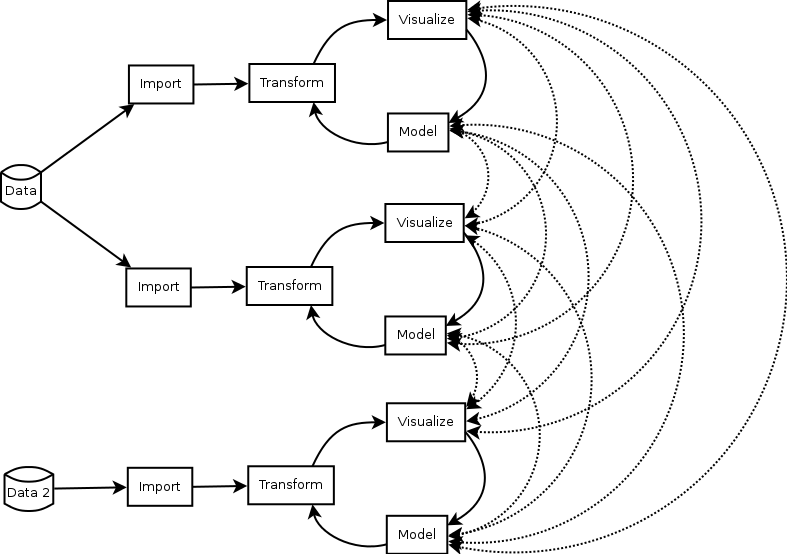

Collaboration Is Core

Source: Gabriel Becker, copyright Genentech Inc.

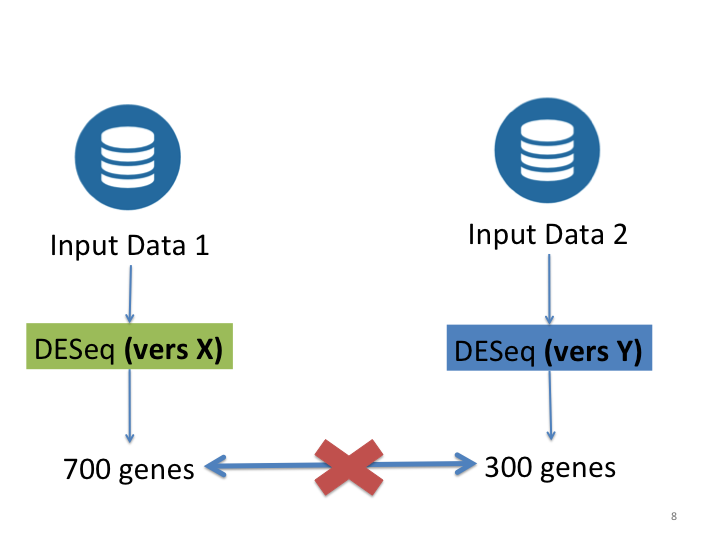

Different Versions

Source: Gabriel Becker, copyright Genentech Inc.

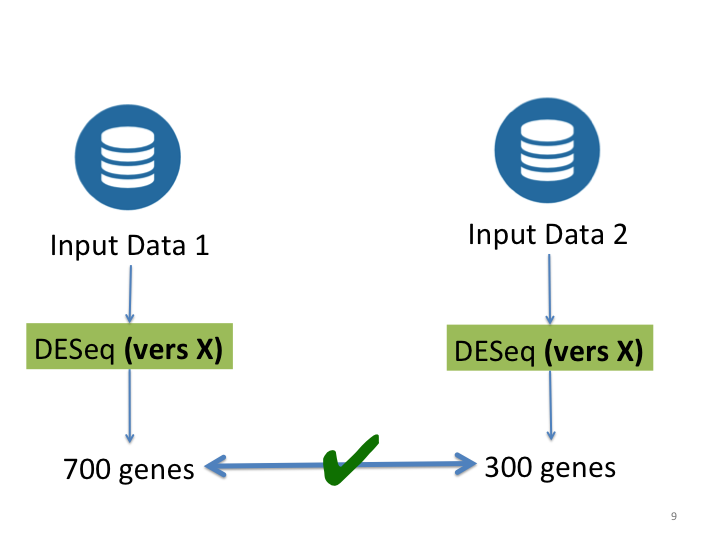

Same Versions

Source: Gabriel Becker, copyright Genentech Inc.

Currency

Recall

Source: Gabriel Becker, copyright Genentech Inc.

Bleeding Edge Methods

- Called this for a reason

- Continue to be refined

- Likely won't stay state of the art

- May not even remain valid

No Hard-And-Fast Rule, But Generally

- Modern versions take precedence

- Be skeptical of genes in the 864 but not the 359

- Modern methods take precedence^

^ By how much depends on why the old method fell out of favor

Note

The Currency concern is very real in Bioinformatics, Deep Learning, and other fast-moving settings. It's not really a concern when using a "classical" method (GLMs, Random Forests, etc.).

Completeness

Literate Statistical Practice

[The resulting document] should describe results and lessons learned … as well as a means to reproduce all steps, even those not used in a concise reconstruction, which were taken in the analysis.

– Rossini, Literate Statistical Practice (emphasis mine)

Translation

Provenance

Provenance

the source or origin of an object; its history and pedigree; a record of the ultimate derivation and passage of an item through its various owners

– Oxford English Dictionary

Knowing a Result's Provenance Can Help Us

- Gain insights into the reasoning used to create it,

- verify acceptable procedures and methods were used, and

- know how to reproduce it.

Paraphrase of Freire et al., Provenance for Computational Tasks: A Survey, Computing in Science & Engineering, 2008

Result Provenance Includes Input Data Provenance

Reproducibility In Practice

In theory there is no difference between theory and practice; in practice there is.

– Unattributed

A "Slightly Out-of-Order" Reproduction Process

0. No Way To Get/Recreate Code and Data?

1. Works With Modern SW Versions

2. Try To Recreate Original Environment

3. Worked In 'Original' Env, We're Good!

4. But Modern Results Disagree?

5. What Now?

We can

- Take the modern results and move forward using them

- Apply other methods to look for corroboration

- Throw up our hands and switch to a field that uses established classical methods

Things You Can Do Now

Script

Scripted Analyses

Manual Steps

Which Would You Rather Be Tested On?

- How the chicken crossed the road, or

- How many licks it took

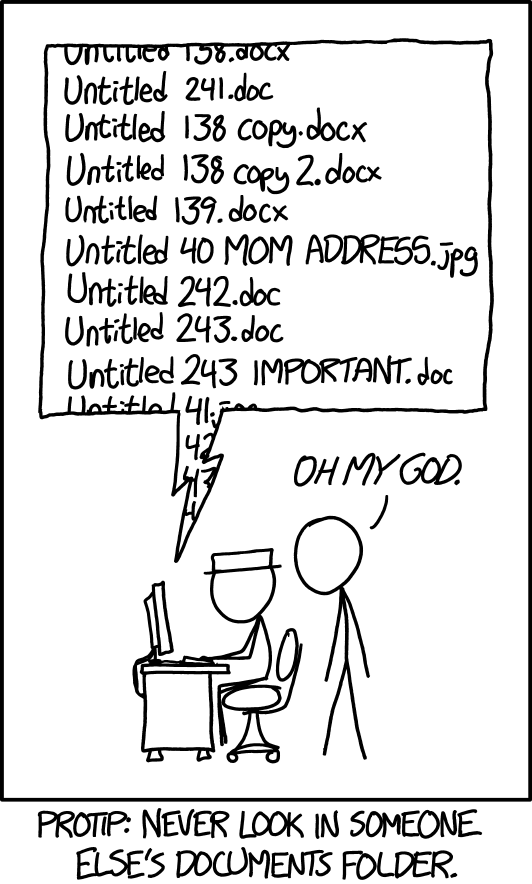

Versions and File Naming

Avoid Encoding Metadata Solely In Filenames

- Version

thesis_final_revised_final_v2.Rmd

- Species/Gene/etc

mydata_BRAF_mut_only.dat

- Timestamp

mydata_as_of_2018_03_03.dat
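
One possible alternative is to keep the filename plain and record the metadata in a small machine-readable file next to the data. The sketch below is in Python for illustration only; the helper names and the `<name>.meta.json` sidecar convention are assumptions of this example, not an established standard or something the talk prescribes.

```python
import json
import tempfile
from pathlib import Path


def write_with_metadata(data_path: Path, content: bytes, metadata: dict) -> Path:
    """Write a data file plus a JSON sidecar describing it.

    The sidecar name (<name>.meta.json) is a convention invented for this sketch.
    """
    data_path.write_bytes(content)
    sidecar = data_path.with_name(data_path.name + ".meta.json")
    sidecar.write_text(json.dumps(metadata, indent=2, sort_keys=True))
    return sidecar


def read_metadata(data_path: Path) -> dict:
    """Load the sidecar metadata that accompanies a data file."""
    sidecar = data_path.with_name(data_path.name + ".meta.json")
    return json.loads(sidecar.read_text())


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "mydata.dat"
    write_with_metadata(
        path,
        b"sample\tvalue\n",
        {"version": 2, "gene": "BRAF", "filter": "mutant only", "as_of": "2018-03-03"},
    )
    meta = read_metadata(path)
    print(meta["gene"], meta["as_of"])
```

The version field here is only a label; tracking actual changes to the file is better left to version control.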

Version Control

Literate Analyses

Use rmarkdown, knitr, or Sweave

This takes care of trust in reporting^ right away, and it's super easy.

^ absent actual misconduct, i.e., editing output files manually

Want to Use Jupyter/R Markdown Notebooks?

You must go watch or read Joel Grus' fantastic talk first

Watch: https://www.youtube.com/watch?v=7jiPeIFXb6U

Read: https://docs.google.com/presentation/d/1n2RlMdmv1p25Xy5thJUhkKGvjtV-dkAIsUXP-AL4ffI/

3 Rules If You Still Want To Use Notebooks

- Always run them start to finish before publication

- Never fully trust output in a notebook that wasn't run start to finish

- Always. Run. Them. Start. To. Finish. Before. Publishing.
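
A quick, partial check for the second rule: in a Jupyter notebook that was run top to bottom in a fresh session, the code cells should carry execution counts 1, 2, 3, ... in order. The Python sketch below assumes the nbformat 4 JSON layout; the function name `ran_start_to_finish` is made up for this example. A passing check is suggestive rather than conclusive, so it complements re-running the notebook and does not replace it.

```python
import json


def ran_start_to_finish(notebook: dict) -> bool:
    """Return True if code cells carry execution counts 1, 2, 3, ... in order.

    Assumes the nbformat 4 layout: a top-level "cells" list whose code cells
    have "cell_type" == "code" and an "execution_count" field. Code cells with
    empty source are skipped, since they typically receive no execution count.
    """
    counts = [
        cell.get("execution_count")
        for cell in notebook.get("cells", [])
        if cell.get("cell_type") == "code"
        and "".join(cell.get("source", [])).strip()
    ]
    return counts == list(range(1, len(counts) + 1))


# Two tiny in-memory notebooks; a real one would be loaded with json.load().
clean = {
    "cells": [
        {"cell_type": "markdown", "source": ["# Analysis"]},
        {"cell_type": "code", "source": ["x = 1"], "execution_count": 1},
        {"cell_type": "code", "source": ["x + 1"], "execution_count": 2},
    ]
}
stale = {
    "cells": [
        {"cell_type": "code", "source": ["x = 1"], "execution_count": 3},
        {"cell_type": "code", "source": ["x + 1"], "execution_count": 1},
    ]
}

print(ran_start_to_finish(clean))
print(ran_start_to_finish(stale))
```

To actually re-execute a notebook from the top, `jupyter nbconvert --to notebook --execute` is one commonly used route.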

Sharing

Current State

Current State

Authors for only ~44% (36%, 50%) of papers in Science shared both code and data

Share Your Code!

- If it's right no one cares how ugly it is

- If it's wrong, readers deserve to know

- And you do, too

Share Your Data (If Ethically Allowable)

When to share data is a tricky question.

But answers of "never" and "only once it's obsolete/irrelevant" make you the villain of the piece.

Publish Science

As if you, personally, will need to understand, evaluate, use, and extend your result in 5 years, working only from published materials, having lost all personal materials.

Environment Recreation

Environment Recreation

Crucial for both multi-analyst collaboration and for strict reproduction

Remember to think about currency

Recreating/Distributing R Package Libraries

- switchr

- packrat

- MRAN snapshots

Docker For Reproducibility

It's Easy

- Boettiger and Eddelbuettel, An Introduction to Rocker: Docker Containers for R, The R Journal, 2017

- See Also: Bioconductor AMIs http://bioconductor.org/help/bioconductor-cloud-ami/

Confession

mybinder.org

Check out http://mybinder.org

- Makes your code runnable by others

- Uses Docker

- Be careful: dependency versions in images can change over time

Provenance-lite

Aspire To

- Know where your data came from

- Pass that information along with your results (and code)

(Even if imperfectly)
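
An imperfect version of this can be very small. The Python sketch below records where an input came from, when it was retrieved, and a checksum of its contents, then attaches that record to the result. The function names, the record fields, and the example URL are hypothetical and chosen only for illustration.

```python
import hashlib
from datetime import date


def describe_input(content: bytes, source: str, retrieved: date) -> dict:
    """Build a minimal provenance record for one input dataset."""
    return {
        "source": source,
        "retrieved": retrieved.isoformat(),
        "sha256": hashlib.sha256(content).hexdigest(),
    }


def analyze_with_provenance(content: bytes, provenance: dict) -> dict:
    """Toy analysis (a mean) that carries its input provenance along."""
    values = [float(line) for line in content.decode().splitlines() if line.strip()]
    return {
        "mean": sum(values) / len(values),
        "n": len(values),
        "input_provenance": provenance,
    }


raw = b"1.0\n2.0\n3.0\n"
prov = describe_input(
    raw,
    source="https://example.org/measurements.csv",  # placeholder URL
    retrieved=date(2019, 5, 1),
)
result = analyze_with_provenance(raw, prov)
print(result)
```

Anyone who later obtains the same file can recompute the checksum and confirm they are starting from the same input.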

Publishing Results

What To Include

- Code (+ detailed description of any manual steps)

- Data (if you can)

- Data Provenance (if you have it)

- Version/environment info
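
For the last item, even a plain dump of interpreter, platform, and installed package versions is better than nothing. The sketch below uses Python's standard library purely as an illustration; the function name `environment_snapshot` and the shape of the output are assumptions of this example.

```python
import json
import platform
from importlib import metadata


def environment_snapshot() -> dict:
    """Collect interpreter, platform, and installed package versions."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions with unreadable metadata
            packages[name] = dist.metadata.get("Version")
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": dict(sorted(packages.items())),
    }


snapshot = environment_snapshot()
print(snapshot["python"], snapshot["platform"])
print(len(snapshot["packages"]), "installed packages recorded")

# The whole snapshot serializes to JSON, so it can be published with results.
snapshot_json = json.dumps(snapshot, indent=2)
```

A snapshot like this describes an environment but does not recreate it; recreating it is the job of tools such as the ones listed under Environment Recreation.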

Consider Open Access When You Can

Not a simple issue, but ask yourself: how can a result be useful to people who can't even read about it?

Wrap-up and Conclusions

Think About What You Actually Want

And whether/how you can

- Get it

- Provide it to your readers

Reproducibility is NOT Valueless

But it is most usefully viewed within a larger, more nuanced context.

Share Your Code and Data (If Possible)

And don't be assholes to others who do the same. Even when you find problems in it.

Publish Science

You'd be able to trust if it came out of a lab you don't know.

Selected (and Incomplete) Further Readings

Compendiums

Gentleman and Temple Lang, Statistical Analyses and Reproducible Research, Bioconductor Working Papers, 2004

Marwick, Boettiger and Mullen, Packaging Data Analytical Work Reproducibly Using R (and Friends), The American Statistician, 2018

FAIR data

Wilkinson et al., The FAIR Guiding Principles for scientific data management and stewardship, Scientific Data, 2016

https://www.force11.org/group/fairgroup/fairprinciples

Dunning, de Smaele and Böhmer, Are the FAIR Data Principles fair?, International Journal of Digital Curation, 2017

Case Studies and Attempts

Marwick, Computational Reproducibility in Archaeological Research: Basic Principles and a Case Study of Their Implementation, Journal of Archaeological Method and Theory, 2016

FitzJohn, Pennell, Zanne and Cornwell, Reproducible research is still a challenge, ROpenSci Blog, 2014 https://ropensci.org/blog/2014/06/09/reproducibility/

ROpenSci, Reproducibility In Science, http://ropensci.github.io/reproducibility-guide/

Cultural Challenges/Barriers to Reproducibility

Basically everything Victoria Stodden has ever published.

Seriously, just go read it (at least the abstracts)

Provenance(ish) In R

Becker, Moore and Lawrence, trackr: A Framework for Enhancing Discoverability and Reproducibility of Data Visualizations and Other Artifacts in R, Journal of Computational and Graphical Statistics, 2019

Biecek and Kosiński, archivist: An R Package for Managing, Recording and Restoring Data Analysis Results, Journal of Statistical Software, 2017

Reproducible Pipelines in R

Landau, The drake R package: a pipeline toolkit for reproducibility and high-performance computing, The Journal of Open Source Software, 2018
